How to use Google Lens on your iPhone or iPad

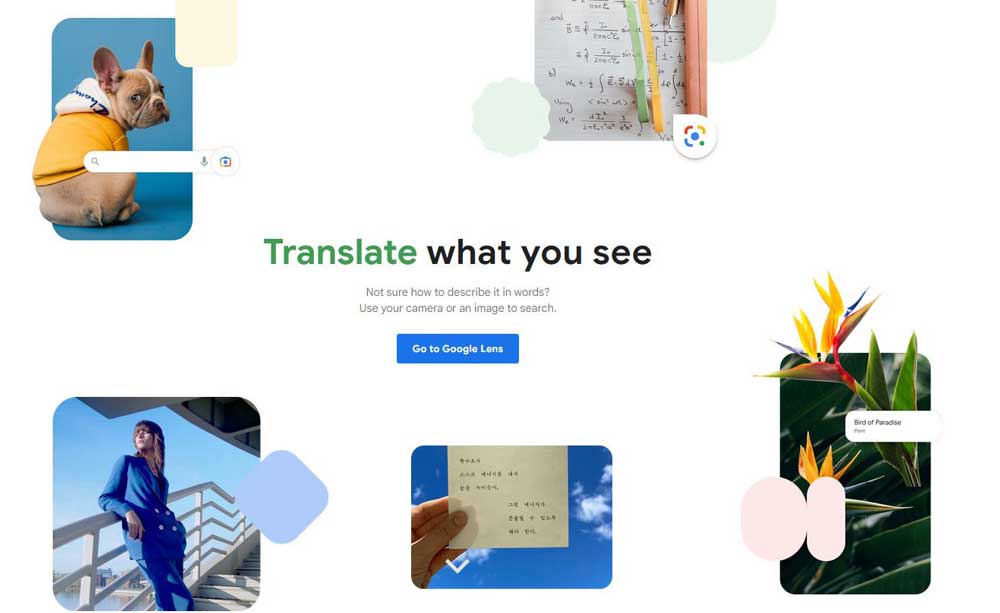

Use Google Lens on your iPhone or iPad and let your device do all the hard work for you, from solving tricky equations to identifying exotic plants

By TechRadar

Taking a moment to figure out how to use Google Lens on your iPhone or iPad is going to be well worth your time because, trust us, this is one valuable image recognition tool that’s going to make your life a whole lot easier.

Since its official launch back in 2017, Google Lens has become one of our favorite AI-powered technology tools. Using your smartphone camera and deep machine learning, Google Lens can translate text, help you identify plants and find answers to equations – pretty cool, huh?

One of the best Android apps by far, Google Lens is also available on iOS devices and is incredibly easy to install. Whether you have the best iPad or the best iPhone, you’ll find Google Lens works beautifully on both.

Alongside the features mentioned above, this image recognition tool is also fantastic when you’re out shopping. See a pair of shoes that you like? Google Lens will instantly identify them (alongside providing you with similar items) and give you access to reviews where available, allowing you to quickly and easily make an informed decision before you head to the checkout.

And it’s a total lifesaver when you’re traveling. You’ll find Google Lens is able to identify buildings and landmarks, pulling up directions for you in a split second, alongside opening hours so you don’t make a wasted trip. It’s also brilliant at recognizing restaurants and cafes, so you can read the reviews from across the street before you approach the door and get bowled over by eager wait staff!

Now that we’ve got you all excited, we know you’ll be eager to find out how to use Google Lens on your iPhone or iPad. Here’s everything you need to know to make installing this powerful tool a piece of cake…

Google Lens doesn’t have its own dedicated app on Apple’s App Store. Instead, its functionality is baked into two different Google apps. Which one is the best for you will depend on how you plan to use Google Lens and on which device.

The first option to use Google Lens on your iPhone or iPad is the Google app. This gives you access to a whole range of Google services on your iPhone, including personalized news stories, sports updates and weather info, as well as a full suite of Google search tools – including Google Lens.

Install the app and you’ll be able to use Google Lens with your camera in real time on iPhone (though not on iPad, sadly), as well as searching with images already saved to your camera roll. To get started, download the latest version of the Google app from the App Store.

Alternatively, you can install the Google Photos app instead. This is the best option for iPad. Google Photos is Google’s cloud photo backup service and it includes a whole host of neat features for editing and organizing your images online.

It also incorporates Google Lens: open any image from your camera roll in the Google Photos app and with just a tap you’ll be able to analyze it for information using Google Lens.

The key difference is that Google Photos does not allow you to search in real time with your iPhone or iPad camera. If that’s not a problem, just download the latest version of the Google Photos app from the App Store.

Both apps will request access to your photo library the first time you open them or try to use the Google Lens tool. It’s necessary to grant this so that Google can run your snaps through its servers. Even if you’re using Google Lens in real time, several of the features require you to shoot a still of your subject before the software is able to analyze it.

If you want to search in real time using your iPhone, start by launching the Google app. From the app’s home screen, tap the camera icon to the right of the main search bar (this is sadly missing in the iPad version of the app). If it’s your first time using the app, you may be asked to grant Google permission to access your photos. You may also see a dialogue box explaining that Google Lens will continuously try to identify objects whenever it’s running.

With Google Lens open, you can swipe left and right to switch between the various modes, the names of which will appear along the bottom of your screen. Each label is relatively self-explanatory. Translate, for example, will allow you to translate writing from one language to another. Text lets you take a photo of text, which can then be read aloud to you or copied into a different app. Dining allows you to take a photo of food, for identification and recipe suggestions.

Once you’ve selected the relevant mode, simply aim your camera at the object you’d like Google Lens to search with. White circles will appear across the screen as Google analyzes the contents of the live image.

When it identifies an object in the frame, a larger white circle will appear over it. If it recognizes multiple objects, each will be marked with a white circle. To select the object you want to search with, just aim your camera at the appropriate circle until it turns blue. A message will appear which says ‘Tap the shutter button to search’.

Do as it says and Google will take a moment to communicate with its servers, before presenting you with a list of results tailored to the item detected and the mode you selected. Note that you’ll need an active Wi-Fi or mobile data connection for this process.

The image you shot will also remain on screen. If the object you selected could fit within different categories – say text, translation and homework – you can switch the search mode from this screen, by tapping the white button on the left containing three horizontal lines. The list of results below will update accordingly, without needing to take another photo.

Want to search with a different object from the same scene? As above, you don’t need to take another photo: just tap on one of the white circles within the image you already shot, to find out what Google Lens has identified. Or if you think there’s an object which Google missed, you can tap the white button with the magnifying glass on the right. This lets you give Google a helping hand by focusing in and reframing the search area around a specific object in the scene.

Sometimes you might need the skills of Google Lens at a later date. Say you spot a mysterious plant when you don’t have strong data reception, or you take a photo of your food at dinner – but don’t want to antisocially search at the table. Don’t worry: you can easily use Google Lens to search with photos saved to your iPhone or iPad’s camera roll, any time.

There are two ways to search with snaps saved to your smartphone or tablet. If you’re using the Google app, start by tapping the camera icon next to the search bar on the home page. With Google Lens activated, tap the picture frame to the left of the shutter search button. This will bring up your photo library. Select any photo and Google will analyze it for objects.

Alternatively, you can do the same thing through the Google Photos app. Simply open the image you’d like to search with, then tap the Google Lens button at the bottom of the screen. It’s second from the right and looks like a partially framed circle. Hit this and Google will again analyze the image for any identifiable objects.

Whichever method you use, the next screen will be the same. Google will present a range of results relevant to what it detects in your chosen image. As above, you can change the search mode by tapping the button on the left, or reframe the scene to zero in on a different object using the button on the right. And again, if Google detects several objects in the scene, you can switch between them by tapping the white markers which label them.

Google Lens is generally very impressive when it comes to identifying objects and returning relevant results. From animals to plant varieties to delicious dishes, it can be scarily good at detecting and recognizing the subject of your snaps. But occasionally Google gets it wrong.

In low lighting, for example, or if the object in question has an undefined shape, Google can struggle to understand what it’s looking at. Likewise, even if Google Lens does recognize the object, the suggested search results sometimes aren’t the most useful – or accurate.

If you find that this is the case when using Google Lens on your iPhone or iPad, you can help to improve the tool by giving feedback. Scroll down to the bottom of the list of search results and you’ll see a query saying, “Did you find these results useful?” You can then tap ‘yes’ or ‘no’. The latter option will then allow you to send feedback detailing your issues, which should help make performance better in future.

This article was first published in TechRadar in March 2022